Last updated: February 11, 2024.

Find out how many amps a TV uses in On and Standby mode in 2024. Get the amp draw breakdown by TV size and screen resolution. Plus, see how you can reduce your TV’s amp draw.

This article details TV amp draw results from a comprehensive study into the actual power consumption of over 107 TVs.

Key takeaways:

- Modern TV amp draw ranges from 0.08 amps to 0.98 amps, with 0.98 amps being the most common, and 0.49A being the average;

- On standby, modern TVs use between 0.0042 amps and 0.025 amps, with 0.01 amps being the average;

- The manufacturer’s listed amperage refers to the maximum amount of amps expected under normal operating conditions, which is typically 1A to 3A for a TV;

- TV amp draw increases with TV size and screen resolution, but this is not necessarily the case in standby mode; and

- Older TVs draw more amps (e.g. a 42 inch plasma TV uses close to 1.5A when on, and 0.15A in standby mode).

Pro tip: don’t try to measure TV amp draw with a standard multimeter – the prong tips are likely too small. I’m a qualified electrician and, in my experience, using the standard small prong tips to test amp draw creates an unnecessary risk of damage and injury. Simply use a wattmeter, and the TV Amp Calculator below.

Clarification note: it’s common for some readers to confuse the term “amps” with overall power consumption. So, just to clarify, amps (amperage) refers to the strength of electrical current being drawn by the TV. Overall power consumption refers to how many watts a TV uses. Watts is the product of amps and voltage.

How many amps does a TV use?

TVs use 0.49 amps on average.

Modern TV amperage ranges from 0.08 amps to 0.98 amps, with 0.98 amps being the most common.

These results are based on the actual power consumption of over 107 TVs with a 120V power supply.

Manufacturer listed amperage figures will be higher, as this refers to the maximum amount of amps that a TV is expected to use under normal operating conditions.

Based on manufacturer information, modern TVs typically have an amp draw that ranges from 1A to 3A.

Older TVs, such as plasma TVs, old LCD TVs, and cathode ray tube (CRT) TVs, draw considerably more current.

For perspective, here are some brief amperage insights into older TVs:

- A relatively small 28 inch CRT TV uses close to 0.9A;

- An old 40 inch LCD flat screen TV draws nearly 0.8A; and

- A 42 inch plasma flat screen TV uses approx. 1.5A.

These were estimated based on manufacturer listed power ratings for TVs that were considered old even over a decade ago, when I was working on an energy estimation app for a leading utility company.

But let’s get back to more modern televisions to ensure you get the most relevant TV amp insights.

In general, as modern TV size increases, so too does the number of amps drawn.

The table below lists how many amps modern TVs actually use, by size.

| TV size | Result category | Amps used |
| --- | --- | --- |
| 19 inch TV | Average | 0.14A |
| | Most common | 0.14A |
| | Lowest | 0.13A |
| 24 inch TV | Average | 0.16A |
| | Most common | 0.17A |
| | Lowest | 0.15A |
| 32 inch TV | Average | 0.23A |
| | Most common | 0.22A |
| | Lowest | 0.16A |
| 40 inch TV | Average | 0.28A |
| | Most common | 0.26A |
| | Lowest | 0.26A |
| 43 inch TV | Average | 0.4A |
| | Most common | 0.28A |
| | Lowest | 0.28A |
| 50 inch TV | Average | 0.59A |
| | Most common | 0.62A |
| | Lowest | 0.4A |
| 55 inch TV | Average | 0.64A |
| | Most common | 0.68A |
| | Lowest | 0.52A |
| 65 inch TV | Average | 0.79A |
| | Most common | 0.82A |
| | Lowest | 0.60A |
| 70 inch TV | Average | 0.91A |
| 75 inch TV | Average | 0.95A |
| | Most common | 0.98A |
| | Lowest | 0.73A |

As you can see, every 10 inch increase in size results in an average TV amp draw increase of 0.1A to 0.2A (figures are rounded).
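As a rough check on that rule of thumb, here is a minimal Python sketch that works out the average increase per 10 inches between the smallest and largest sizes in the table. The dictionary simply copies the "Average" figures above; the function name is my own and is only for illustration.

```python
# Average amp draw by screen size (inches), copied from the table above.
AVERAGE_AMPS = {
    19: 0.14, 24: 0.16, 32: 0.23, 40: 0.28, 43: 0.40,
    50: 0.59, 55: 0.64, 65: 0.79, 70: 0.91, 75: 0.95,
}


def amps_per_10_inches(data: dict) -> float:
    """Average increase in amps per 10 inches, from smallest to largest size."""
    smallest, largest = min(data), max(data)
    return (data[largest] - data[smallest]) / (largest - smallest) * 10


print(round(amps_per_10_inches(AVERAGE_AMPS), 2))  # 0.14
```

This only compares the two ends of the table, so it smooths over the jumps between individual sizes, but the result does land inside the 0.1A to 0.2A range.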

Continue reading to see how many amps TVs use when in standby mode.

Screen resolution capability also impacts how many amps a television uses.

The table below lists how many amps TVs actually draw, based on maximum screen resolution capabilities.

| TV resolution | Result category | Amps used |
| --- | --- | --- |
| 720p | Average | 0.21A |
| | Most common | 0.17A |
| | Lowest | 0.13A |
| 1080p | Average | 0.28A |
| | Most common | 0.28A |
| | Lowest | 0.12A |
| 2160p | Average | 0.67A |
| | Most common | 0.98A |
| | Lowest | 0.29A |

On average, the higher the TV resolution, the higher the amp draw.

Again, these results are based on the actual power consumption of TVs (not the manufacturer’s listed amperage).

In addition to TV amps, we covered wattage and running costs as part of this TV electricity usage study, which may be of interest to you too.

Next, let’s look at how you can measure how many amps your TV uses.

How to measure TV amp draw

To test the amp draw of a TV, you need a multimeter or wattmeter (or similar device).

A wattmeter is advised as it reduces the risk of electric shock. But it’s worth noting that many models don’t display amperage, so be sure to find a model that does.

Alternatively, if you already have a wattmeter that doesn’t show the amp draw, use the TV Amps Calculator below to work out how many amps your TV uses.

For those more experienced working with live circuits, the following video shows how you can measure amp draw with a multimeter. And, it gives some useful safety information too.

One point that I’d like to highlight is that the multimeter used in the video accepts AC and DC in one setting.

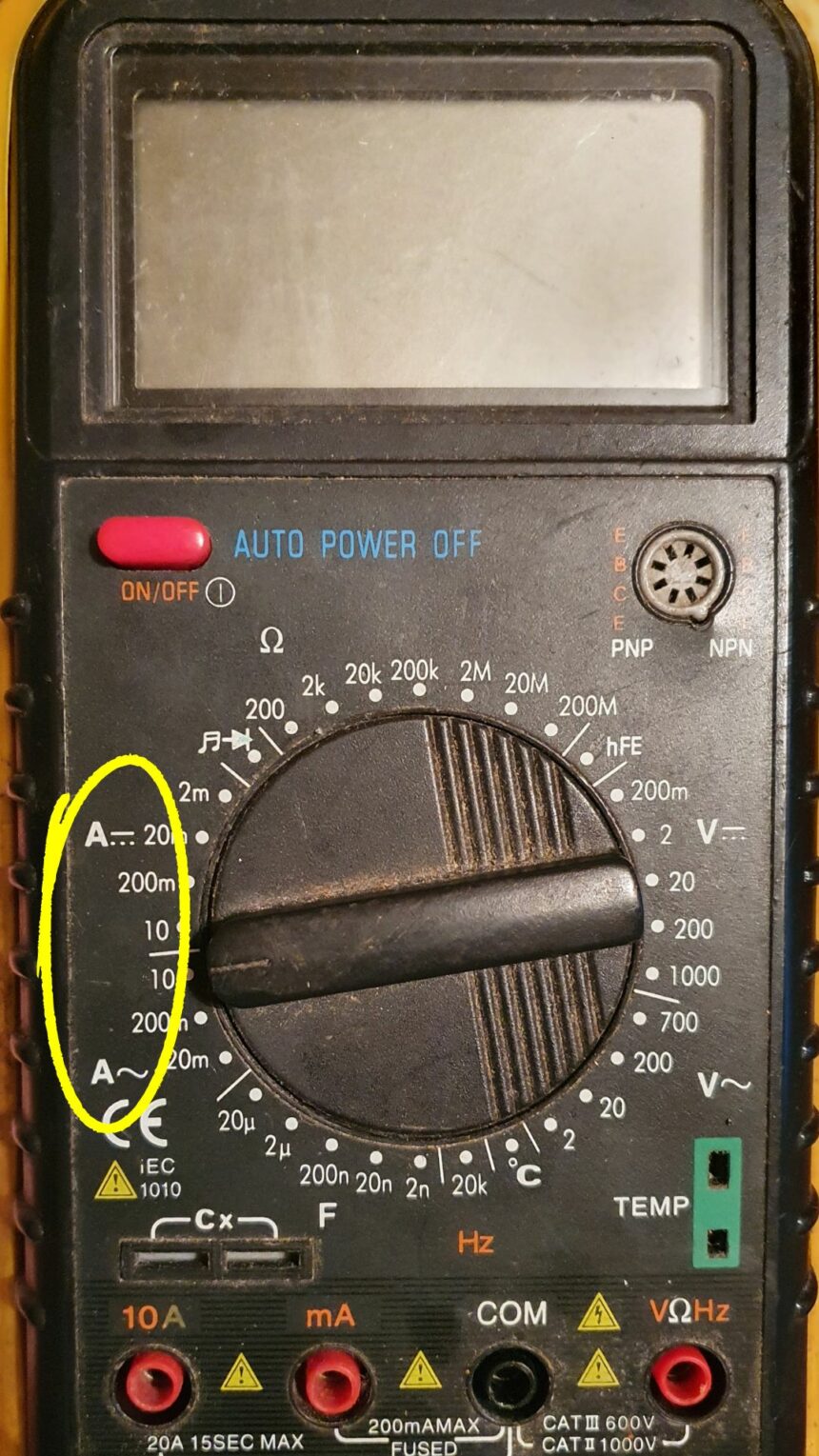

If you have an older multimeter like my spare one pictured below, remember to select the appropriate current type.

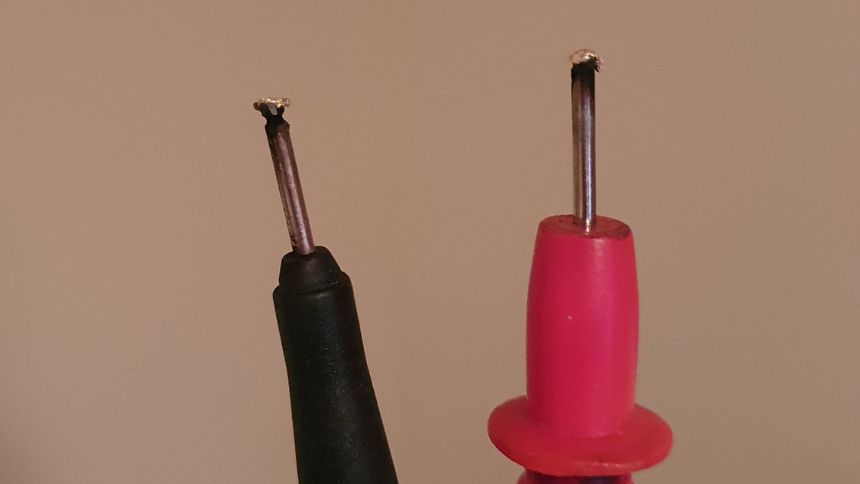

From my experience working with larger currents, and to reinforce a key safety point from the video, don’t use the tiny terminal prongs to test amperage.

They cannot handle large currents, something that I overlooked when working on an industrial circuit.

At this point, for safety reasons, I just expect that they cannot handle any level of current, even on household circuits.

Here’s a picture of my old multimeter leads:

As you can see, there’s quite a lot of damage and discoloration to the prong tips.

Granted, I was working in an industrial unit with larger currents.

But I think it’s best to err on the side of caution.

For this reason, I’ve decided not to list the specific steps that I would take when measuring how many amps a television uses.

Also, I’d expect similar damage to prong tips if testing the amperage of a TV without testing in series.

So, again, I’d recommend simply using a wattmeter (and calculating the amp draw using the formula below, if needed).

The result of this would be the actual amount of amps that a TV is using. This is particularly important to know when designing, and adding to an off-grid system.

But in most cases, the estimated TV current draw figures listed above, and the manufacturer’s listed amperage will be sufficient.

As mentioned, the manufacturer’s listed amperage is the maximum amount of amps that a television is expected to use – here’s where you can find this.

Where to find your TV’s amperage

The amperage for a TV is listed at the back of the unit, in the user’s manual and, in many cases, on the power cord (specifically, on the AC adapter).

The amp figure displayed is the manufacturer’s listed amperage, which is the highest expected under normal operating conditions.

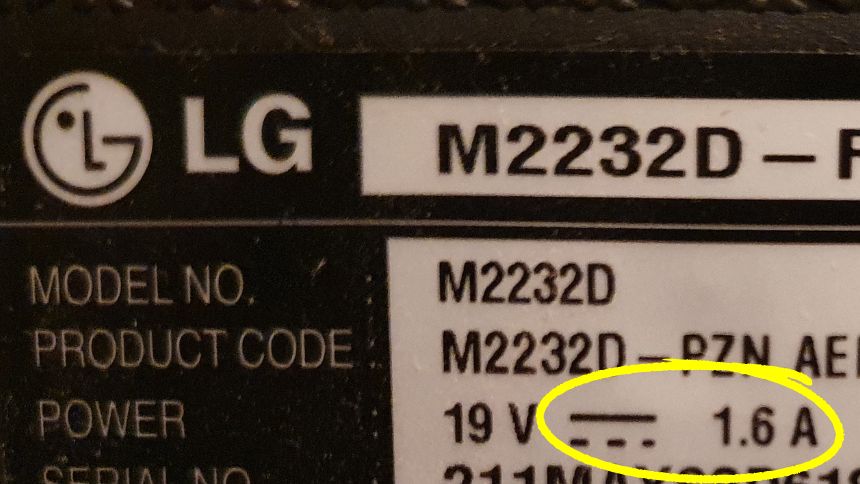

It’s important to note if the current is DC or AC, particularly for old models. Look for a symbol that has a continuous line above a dotted line – this indicates DC. A wavy line indicates AC.

For example, this TV requires input power that’s 1.6 amps DC.

Similarly, this information is also listed on the power cord. However, it’s listed as “Output Power.” The input power, in this case, refers to the power range that the cord is designed to convert (typically, 100V – 240V AC, 50/60Hz, 1-3A).

So now that we’ve covered where to find TV amperage, let’s take a brief look at how to work out TV amp draw.

How to work out how many amps a TV uses

To work out how many amps a TV uses, simply divide its wattage by your supply voltage.

Here’s the formula: I = P/V.

I is the current (measured in amps), P is the Power (measured in watts), and V is the voltage.

So, amps = watts / volts.

As mentioned previously, if the manufacturer’s listed wattage (i.e. the TV power rating) is used then the amp draw will be the maximum expected under normal operating conditions.

Measuring how many watts a TV uses, and then inputting this into the formula above, will tell you how many amps that TV is drawing at the specific time of testing.
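For those who prefer code, the formula can be sketched in a few lines of Python. The function name and the 60 watt reading are just illustrative assumptions, and, like the formula itself, this ignores power factor.

```python
def tv_amps(watts: float, volts: float) -> float:
    """Return the current in amps for a given wattage and supply voltage (I = P / V)."""
    if volts <= 0:
        raise ValueError("supply voltage must be positive")
    return watts / volts


# Hypothetical wattmeter reading of 60 W on a 120 V supply.
print(round(tv_amps(60, 120), 2))  # 0.5
```

Swap in the listed wattage instead of a measured reading to get the maximum expected amp draw.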

And if you don’t want to do the math, use the following TV Amps Calculator.

TV amps calculator

Use this TV Amps Calculator to estimate how many amps a TV uses.

Simply enter how many watts a TV uses (or its listed wattage, depending on your intention), and the supply voltage.

For reference, the nominal mains voltage in the US is 120V (although some circuits are 240V), and in the UK it’s 230V. Also, 12V and 24V are common for those running a TV from a solar generator, in an RV, or off-grid in general.
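To show how much the supply voltage changes the result, here is a small Python sketch that runs the same wattage through several of the voltages mentioned above. The 60 watt figure and the names are assumptions for illustration, and the sketch ignores any losses in an inverter or adapter.

```python
# Supply voltages mentioned above (labels are illustrative).
SUPPLY_VOLTAGES = {"US mains": 120, "UK mains": 230, "24V DC": 24, "12V DC": 12}


def amps_by_supply(watts: float) -> dict:
    """Return the amp draw for one wattage at each supply voltage (amps = watts / volts)."""
    return {name: round(watts / volts, 2) for name, volts in SUPPLY_VOLTAGES.items()}


# Hypothetical TV using 60 W.
for name, amps in amps_by_supply(60).items():
    print(f"{name}: {amps} A")
```

The same TV wattage means a much larger current on a 12V system than on mains, which is why the supply voltage matters so much when sizing DC circuits.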

Related: see how you can turn any TV into a solar powered TV (in 5 simple steps).

Please note: this calculator is intended for those designing circuits, particularly DC circuits. Skip to the next section to see a typical household AC circuit that powers most TVs. Inexperienced readers should consult a licensed electrician.

I just mentioned several voltage supplies, touched on the different currents, and noted the input and output TV power requirements, so things may sound a little complicated for some.

So, to simplify, let’s take a brief look at how the vast majority of TVs are powered.

The typical TV circuit (plus a key safety note)

TVs are generally powered by a 100V – 240V AC, 50/60Hz supply delivered through a standard outlet. In the US, a typical circuit is wired with 14 gauge cable and protected by a 15 amp breaker.

For additional safety, TVs have an internal fuse on their power supply board, and some may also have a fuse in the power cord.

UK TV power cords have fuses that are typically 3A.

These days, it’s common to see TVs with power cords, similar to laptop chargers, that convert the incoming mains supply to the energy input required by the TV.

As a result, if you need to replace your TV power cable, it’s important to replace it with a cord of similar spec. Otherwise your TV’s power supply board could get damaged, or worse, cause a fire.

So, pay particular attention to the output power specs on the new cord before purchase.

Running a TV off-grid, on a generator for example, will result in a different power supply.

As long as the supply stays within the typical AC mains range and meets the TV’s input power requirements, it should be safe. To be sure, check the manual or ask the manufacturer.

In addition to the TV user manual, and the power cord in many cases, TV input power requirements are typically listed clearly on the back of the device.

Now, at this point, we’ve covered how many amps a TV uses when running. But what about when they’re in standby mode?

How many amps does a TV use on standby?

On standby, modern TVs use between 0.0042A and 0.025A, with 0.01A being the average.

The amount of amps a TV uses in standby mode increases with screen size, in general.

The increase in standby amp draw, however, is less linear than the increase seen when TVs are powered on.

That being said, the general increase is no surprise as TV standby wattage also increases along with screen size.

The table below lists how many amps TVs use in standby mode, on average, by screen size.

| TV size | Amps used on standby |
| --- | --- |
| 19 inch TV | 0.0042A |
| 24 inch TV | 0.0065A |
| 32 inch TV | 0.0055A |
| 40 inch TV | 0.0042A |
| 43 inch TV | 0.0074A |
| 50 inch TV | 0.0172A |
| 55 inch TV | 0.012A |
| 65 inch TV | 0.0088A |
| 70 inch TV | 0.0042A |
| 75 inch TV | 0.0218A |

As you can see, the amount of amps a TV uses in standby mode increases, in general, as TV sizes increase. However, the increase is not totally linear. Some are simply more energy efficient than others.
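To put these small standby figures into more familiar terms, the sketch below converts amps back to watts (watts = amps × volts). It assumes the standby figures use the same 120V supply as the rest of the study; the function name is my own.

```python
SUPPLY_VOLTS = 120  # assumed: the supply voltage the study's amp figures are based on


def standby_watts(amps: float, volts: float = SUPPLY_VOLTS) -> float:
    """Convert a standby amp draw to watts (P = I x V)."""
    return amps * volts


# Lowest, average and highest standby amp draw quoted in this section.
for label, amps in [("lowest", 0.0042), ("average", 0.01), ("highest", 0.025)]:
    print(f"{label}: {standby_watts(amps):.2f} W")
```

Under that assumption, the standby range works out at roughly 0.5 to 3 watts.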

Older TVs, as you’d expect, use more amps in standby mode than modern TVs.

Here’s a sample of old TV amperage results (again, these results were identified over 10 years ago from TVs that were even considered old at the time):

- A relatively small 28 inch CRT TV uses nearly 0.03A on standby;

- A 40 inch LCD flat screen TV draws close to 0.04A when in standby mode; and

- A 42 inch plasma flat screen TV uses a relatively substantial 0.15A on standby.

OK, now back to modern TV amps.

Interestingly, unlike when modern TVs are powered on, standby TV amp draw does not increase along with screen resolution capability.

The table below shows how many amps a TV draws in standby mode by screen resolution.

| TV resolution | Standby amps |
| --- | --- |
| 720p | 0.0052A |
| 1080p | 0.0042A |
| 2160p | 0.005A |

So, there’s no correlation between max TV resolution capabilities and amp draw in standby mode.

Now that we know how many amps a TV pulls in on and standby mode, and by TV size and resolution capability, let’s take a look at ways to reduce TV amp draw.

5 tips to reduce the amount of amps your TV draws

Here are 5 ways that you can reduce the amp draw of your TV:

- Reduce screen brightness. This reduces the amount of amps a TV uses because the overall energy usage decreases.

- Reduce sound levels. Similar to the previous point, reducing the volume reduces TV energy usage, which in turn, reduces the amount of amps a TV pulls.

- Lower the resolution. Lowering a TV’s screen resolution setting reduces the amp draw when the TV is on. However, this is not necessarily the case when the device is in standby mode, so…

- Unplug your TV when it’s not in use. This will prevent any amp draw while the TV is in standby mode.

- Use the power-saving mode or the ENERGY STAR preset. This reduces the overall power consumption, and, therefore, amp draw.

Pro tip: some online sources suggest increasing the voltage supply to reduce TV amp draw. This is just theoretical, however (it follows from I = P/V, where a higher voltage means a lower current for the same power). Modern TVs have AC adapters that convert input power to the requirements of the specific TV. Increasing the voltage will either have no impact, or damage the device. Always make sure to meet the power requirements of your TV – stay within the power range of its AC adapter.

If you’re looking to make more considerable reductions in amp draw then you may need to upgrade your device to a more efficient or smaller model. Here are the most energy efficient TVs by size.

Next, let’s cover some frequently asked questions (FAQs).

FAQs

The following are FAQs identified during this TV amp draw study.

How many amps does a TV use per hour?

On average, TVs draw 0.49 amps at a single point in time. So, running a TV for one hour uses 0.49 amp-hours (Ah) or 490 milliamp-hours (mAh).

To work out how many amp-hours a TV uses, simply multiply its amp draw by the number of hours.

To work out the maximum expected amp-hours of a TV, use the manufacturer’s listed amperage instead of the actual amp draw.

For example, if a TV has a listed amperage of 2A, and we want to know how many amp-hours it uses after running for 1 hour, we simply multiply 2 (the amperage) by 1 (the number of hours), and get 2. So the max expected amp-hours is 2.

To get the milliamp-hours (mAh), simply multiply this answer by 1,000.
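The same steps can be sketched in Python. The function names are hypothetical, and the inputs are the average amp draw and the 2A listed amperage used in the example above.

```python
def amp_hours(amps: float, hours: float) -> float:
    """Amp-hours used: amp draw multiplied by the number of hours."""
    return amps * hours


def to_milliamp_hours(ah: float) -> float:
    """Convert amp-hours to milliamp-hours."""
    return ah * 1000


average_ah = amp_hours(0.49, 1)  # average TV amp draw for 1 hour
maximum_ah = amp_hours(2, 1)     # 2A listed amperage for 1 hour

print(round(average_ah, 2), "Ah =", round(to_milliamp_hours(average_ah)), "mAh")
print(round(maximum_ah, 2), "Ah =", round(to_milliamp_hours(maximum_ah)), "mAh")
```

Change the number of hours to match your own viewing time, for example when sizing a battery for an off-grid setup.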

How many amps does a 65 inch TV use?

65 inch TVs use 0.79A, on average.

The most common current draw for 65 inch televisions is 0.82A, and the lowest identified in the study was 0.6A.

The manufacturer’s listed amperage will be higher, typically 2A to 3A.

In standby mode, 65 inch TVs draw an average of 0.0088A.

How many amps does a 70 inch TV use?

70 inch TVs use 0.91A when on, and 0.0042A in standby mode, on average.

The maximum expected amperage (i.e. the manufacturer’s listed amperage) will be higher, typically 2A to 3A.

TV fuse rating

There can be 3 fuses protecting a TV, each with different ratings.

The fuse or breaker protecting the household circuit powering the TV, along with the other devices on the circuit, is typically 15 amps.

The fuse in the plug, more common in the UK, is typically 3A. And the fuse in the power supply board is typically 5A.

Different TVs and circuits can use different fuse sizes. So, when replacing a TV fuse, be sure to check the original fuse rating and replace it with the same sized fuse.

How many amps does a 32 inch LED TV use?

32 inch LED TVs use 0.23A, on average, but they most commonly use 0.22A.

The lowest 32 inch TV amp pull identified in the study is 0.16A.

In standby mode, 32 inch TVs draw 0.0055A (5.5 mA) on average.

The amperage listed by manufacturers of modern TVs within this size range is typically 1A – 2A.

Final thoughts

Knowing the manufacturer’s listed amperage is very important – this information is made readily available.

In addition to knowing the max amperage to expect, it’s important for some to know how many amps TVs usually draw.

However, this information is more difficult to find – solving this problem is the purpose of this article.

I hope you found this article useful. If so, I’m sure you’ll find this TV power consumption article useful too.

And don’t miss: How much does it cost to run a TV?, and Turn Any TV Into A Solar Powered TV: The Easy 5 Step Solution.

To get a snapshot of the high-level results for all electrical units measured as part of this study, check out TV Electricity Usage | Most Cited Study [Results Snapshot].



James F (not to be confused with ECS co-founder James) is our lead author, content & website manager. He has a BSc. in Digital Marketing, and a Diploma in IT. He became a qualified electrician while studying electrical engineering part-time.

James F worked with many certified energy practitioners and energy consultants, including wind and solar photovoltaic installers, before joining the core ECS team. He also helped build the most downloaded energy saving app while working with a leading utility company.

Read more about James F or connect directly on LinkedIn, here.
